Techno History is an informational blog that tells the story of technological evolution and its inventors.

Friday, September 24, 2010

Evolution of Game Controllers

Virtual museum of computing using Blender 3D

The use of technology to represent historical facts and events is simply fascinating. Only 10 to 15 years ago, anyone who needed to teach or present historical facts had nothing but pictures to show objects as they were. Today, powerful free 3D tools like Blender can represent almost any scene or object. A great example is the Museum of Computing at the University of Castilla-La Mancha. It created an interactive museum that shows the evolution of computing through 3D models of the computers and video games developed over the years.

What software did they use? Blender 3D!

Even though it is a virtual museum, several real computers and video game consoles are on display, so visitors can see what the older machines were like. So where does the virtual part come in?

The museum is called virtual because it has several interactive kiosks where visitors can literally hold the computer models in the palm of their hand, using image recognition software and a special marker plaque. The museum's website, mentioned at the beginning of this article, has a video demonstration of these kiosks that shows very clearly how they work.

Now for the best part: the staff who organized the museum have released every 3D model used in the interactive software for download, under a Creative Commons license, in Blender 3D's file format. Yes, the museum's website offers all the computer model files for download. This is not a complete library of models, but for anyone who needs 3D computer models for a similar project, the initiative is fantastic!

Because everything was built for interactive applications that render 3D in real time, the models have relatively few polygons. Much of the detail comes from the textures that accompany every model file.

Evolution of video games

The first game, if we can call it that, was developed in 1962 by Steve "Slug" Russell, Martin Graetz and Wayne Wiitanen at the Massachusetts Institute of Technology (MIT), USA. Working with the experimental computer equipment available to them, they decided to create something to fill their spare time. That is how the first electronic game, Spacewar!, was born.

Ten years later, the first home video game console went on sale in the United States: the Odyssey, made by Magnavox in 1972. The Odyssey was sold in Brazil in the late 1970s. Built there by Philco-Ford, the console became known as Telejogo. This was the "dark age" of video games, when players needed plenty of imagination because squares on the screen stood for people, ships, and so on.

Shortly after the launch of the Odyssey came the phenomenon that almost everyone associates with the history of video games: Atari. Founded by Nolan Bushnell, Atari released the Atari 2600 in the United States in 1977 (it reached Brazil in 1983). The console is considered a cultural symbol of the 1980s and was a sales phenomenon. After Atari's decline in the early 1980s, Nintendo began to build its empire. Its first big sales success was the 8-bit Famicom console, renamed NES (Nintendo Entertainment System) in the West. The NES was home to games that became truly famous, such as Mario and Donkey Kong.

As Nintendo grew and consolidated its position as the world's largest console maker, another Japanese company, Sega, was growing too. To compete with the NES, Sega released the Master System.

Betting that Nintendo would not move beyond 8 bits, Sega developed a new 16-bit console: the Mega Drive. The market leader, Nintendo, naturally entered the fray and launched one of the greatest successes in gaming history: the Super NES. This is one of the classic episodes in the history of video games. Sega's console had more games, but Nintendo's was more advanced and had better graphics. After all, who hasn't heard of the rivalry between Mario and Sonic, the mascots of Nintendo and Sega respectively?

After this long dispute, a strong new competitor entered the gaming world: Sony, which also ushered in a new generation of consoles. Sega launched its 32-bit Saturn at this point, without much success. Nintendo surprised the world by announcing the 64-bit N64! Most important of all, though, was Sony's PlayStation. Backed by a large library of games, it became the leader of its generation, with 100 million consoles sold.

The previous generation of video games is recent enough that even children know it. It includes Sony's awesome PlayStation 2, released in 2000, which continued the PlayStation's success story and could read DVD media; Nintendo's GameCube, released in 2001 as the natural successor to the N64; and the Xbox, from software giant Microsoft.

The current generation of consoles comes down to three releases from the same makers as the previous one. The rivalry between Sony's PlayStation 3, Nintendo's Wii and Microsoft's Xbox 360 looks set to last a long time. Sony chose to wait (a long time) before launching the PS3 with its futuristic graphics, while Nintendo has its huge catalog of classic games plus an innovative way to play. Microsoft, for its part, has extensive experience in software, which can give it significant advantages in this contest.

Video games are so successful today that from 1999 to 2004, the game industry earned 21 billion dollars, more than twice the revenue of all Hollywood movies in the same period.

Wi-Fi

Wi-Fi (pronounced /ˈwaɪfaɪ/) is a trademark of the Wi-Fi Alliance that manufacturers may use to brand certified products that belong to a class of wireless local area network (WLAN) devices based on the IEEE 802.11 standards. 802.11 is the most widely used WLAN technology. Because of the close relationship with the underlying standards, the term Wi-Fi is often used as a synonym for IEEE 802.11 technology.

Not every IEEE 802.11-compliant device is submitted for certification to the Wi-Fi Alliance. The lack of Wi-Fi certification does not necessarily imply a device is incompatible with Wi-Fi devices.

IEEE 802.11 devices are installed in many personal computers, video game consoles, MP3 players, smartphones, printers and other peripherals, as well as in newer laptop computers.

Light-emitting diode - LED

A light-emitting diode (LED) (pronounced /ˌɛl iː ˈdiː/[1]) is a semiconductor light source. LEDs are used as indicator lamps in many devices, and are increasingly used for lighting. Introduced as a practical electronic component in 1962,[2] early LEDs emitted low-intensity red light, but modern versions are available across the visible, ultraviolet and infrared wavelengths, with very high brightness.

When a light-emitting diode is forward biased (switched on), electrons are able to recombine with holes within the device, releasing energy in the form of photons. This effect is called electroluminescence and the color of the light (corresponding to the energy of the photon) is determined by the energy gap of the semiconductor. An LED is often small in area (less than 1 mm²), and integrated optical components may be used to shape its radiation pattern.[3] LEDs present many advantages over incandescent light sources including lower energy consumption, longer lifetime, improved robustness, smaller size, faster switching, and greater durability and reliability. LEDs powerful enough for room lighting are relatively expensive and require more precise current and heat management than compact fluorescent lamp sources of comparable output.
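The link between band gap and color can be made concrete with the photon-energy relation E = hc/λ. Below is a minimal sketch that assumes the emitted photon energy equals the band gap. Real LEDs emit a narrow band whose peak can sit slightly off that value. The function names and the rough 380 to 750 nm visible range are illustrative assumptions, not taken from the text.

```python
# Physical constants (exact SI values); ELECTRON_VOLT converts eV to joules.
PLANCK_H = 6.62607015e-34        # J*s
SPEED_OF_LIGHT = 299_792_458     # m/s
ELECTRON_VOLT = 1.602176634e-19  # J per eV


def emission_wavelength_nm(band_gap_ev: float) -> float:
    """Approximate emission wavelength (nm) for a given band gap (eV).

    Assumes photon energy == band gap, so lambda = h*c / E_gap.
    """
    if band_gap_ev <= 0:
        raise ValueError("band gap must be positive")
    energy_joules = band_gap_ev * ELECTRON_VOLT
    return PLANCK_H * SPEED_OF_LIGHT / energy_joules * 1e9


def spectral_region(wavelength_nm: float) -> str:
    """Rough classification; the 380/750 nm boundaries are approximate."""
    if wavelength_nm < 380:
        return "ultraviolet"
    if wavelength_nm > 750:
        return "infrared"
    return "visible"


if __name__ == "__main__":
    # Example band gaps in eV (illustrative values).
    for gap in (1.42, 1.9, 2.7, 3.4):
        wl = emission_wavelength_nm(gap)
        print(f"{gap:.2f} eV -> {wl:.0f} nm ({spectral_region(wl)})")
```

A wider band gap means more energetic photons and a shorter wavelength, which is why the same principle covers infrared, visible and ultraviolet LEDs.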

Light-emitting diodes are used in applications as diverse as replacements for aviation lighting, automotive lighting (particularly brake lamps, turn signals and indicators) as well as in traffic signals. The compact size, the possibility of narrow bandwidth, switching speed, and extreme reliability of LEDs have allowed new text and video displays and sensors to be developed, while their high switching rates are also useful in advanced communications technology. Infrared LEDs are also used in the remote control units of many commercial products including televisions, DVD players, and other domestic appliances.

Liquid crystal display - LCD

A liquid crystal display (LCD) is a thin, flat electronic visual display that uses the light modulating properties of liquid crystals (LCs). LCs do not emit light directly.

They are used in a wide range of applications including computer monitors, television, instrument panels, aircraft cockpit displays, signage, etc. They are common in consumer devices such as video players, gaming devices, clocks, watches, calculators, and telephones. LCDs have displaced cathode ray tube (CRT) displays in most applications. They are usually more compact, lightweight, portable, less expensive, more reliable, and easier on the eyes. They are available in a wider range of screen sizes than CRT and plasma displays, and since they do not use phosphors, they cannot suffer image burn-in.

LCDs are more energy efficient and offer safer disposal than CRTs. Their low electrical power consumption makes them suitable for battery-powered electronic equipment. An LCD is an electronically modulated optical device made up of any number of pixels filled with liquid crystals and arrayed in front of a light source (backlight) or reflector to produce images in colour or monochrome. The earliest discovery leading to the development of LCD technology, the discovery of liquid crystals, dates from 1888.[1] By 2008, worldwide sales of televisions with LCD screens had surpassed sales of CRT units.

Bluetooth

Bluetooth uses a radio technology called frequency-hopping spread spectrum, which chops up the data being sent and transmits chunks of it on up to 79 bands (1 MHz each) in the range 2402-2480 MHz. This is in the globally unlicensed Industrial, Scientific and Medical (ISM) 2.4 GHz short-range radio frequency band.
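The channel layout above is simple arithmetic: channel k (0 to 78) is centred at 2402 + k MHz, so 79 one-megahertz channels span 2402 to 2480 MHz. The sketch below encodes that mapping. The real hop-selection procedure, derived from the master's clock and address, is defined in the Bluetooth specification and is not reproduced here. The seeded random generator in `toy_hop_sequence` is a hypothetical stand-in that only shows how two devices sharing the same input follow the same hops.

```python
import random

BASE_FREQ_MHZ = 2402   # lowest channel, from the text
CHANNEL_WIDTH_MHZ = 1  # each band is 1 MHz wide
NUM_CHANNELS = 79      # channels 0..78


def channel_frequency_mhz(channel: int) -> int:
    """Centre frequency of Classic Bluetooth channel k: 2402 + k MHz."""
    if not 0 <= channel < NUM_CHANNELS:
        raise ValueError(f"channel must be in 0..{NUM_CHANNELS - 1}")
    return BASE_FREQ_MHZ + channel * CHANNEL_WIDTH_MHZ


def toy_hop_sequence(shared_seed: int, hops: int) -> list[int]:
    """Toy hop sequence: NOT the specification's hop-selection kernel.

    The shared seed stands in for the clock/address information that both
    ends of a real link use, so both sides compute identical hops.
    """
    rng = random.Random(shared_seed)
    return [channel_frequency_mhz(rng.randrange(NUM_CHANNELS)) for _ in range(hops)]


if __name__ == "__main__":
    print(channel_frequency_mhz(0), channel_frequency_mhz(78))
    print(toy_hop_sequence(shared_seed=42, hops=8))
```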

In Classic Bluetooth, which is also referred to as basic rate (BR) mode, the modulation is Gaussian frequency-shift keying (GFSK). It can achieve a gross data rate of 1 Mbit/s. In Enhanced Data Rate (EDR) mode, π/4-DQPSK and 8DPSK modulation are used, giving 2 and 3 Mbit/s respectively.
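The three rates quoted above follow from one relation: gross bit rate = symbol rate × bits per symbol, where bits per symbol = log2(number of distinct symbols). This sketch assumes a symbol rate of 1 Msymbol/s for all three modes. GFSK is binary (1 bit per symbol), π/4-DQPSK uses 4 phase changes (2 bits) and 8DPSK uses 8 (3 bits). The function names are illustrative.

```python
SYMBOL_RATE = 1_000_000  # symbols per second; assumed identical for all modes

# Number of distinct symbols (constellation size) for each modulation.
MODULATIONS = {
    "GFSK (basic rate)": 2,
    "pi/4-DQPSK (EDR)": 4,
    "8DPSK (EDR)": 8,
}


def bits_per_symbol(constellation_size: int) -> int:
    """log2 of a power-of-two constellation size, computed exactly."""
    if constellation_size < 2 or constellation_size & (constellation_size - 1):
        raise ValueError("constellation size must be a power of two >= 2")
    return constellation_size.bit_length() - 1


def gross_bit_rate(symbol_rate: int, constellation_size: int) -> int:
    """Gross (raw) bit rate before any packet or coding overhead."""
    return symbol_rate * bits_per_symbol(constellation_size)


if __name__ == "__main__":
    for name, size in MODULATIONS.items():
        print(f"{name}: {gross_bit_rate(SYMBOL_RATE, size) / 1e6:.0f} Mbit/s")
```

These are gross rates. Usable throughput is lower once headers, error checking and the slot structure are taken into account.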

Bluetooth is a packet-based protocol with a master-slave structure. One master may communicate with up to 7 slaves in a piconet; all devices share the master's clock. Packet exchange is based on the basic clock, defined by the master, which ticks at 312.5 µs intervals. Two clock ticks make up a slot of 625 µs; two slots make up a slot pair of 1250 µs. In the simple case of single-slot packets the master transmits in even slots and receives in odd slots; the slave, conversely, receives in even slots and transmits in odd slots. Packets may be 1, 3 or 5 slots long but in all cases the master transmit will begin in even slots and the slave transmit in odd slots.
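The slot rules above can be checked with a small model. Each slot lasts 625 µs (two 312.5 µs ticks). The master may start sending only in even slots and a slave only in odd slots. Packet lengths of 1, 3 or 5 slots are all odd, so alternating master and slave packets always land on the correct parity. This is a simplified sketch under stated assumptions: it ignores turnaround time, idle slots, and the rule that a slave replies only when addressed. All names are hypothetical.

```python
TICK_US = 312.5          # basic clock tick, from the text
SLOT_US = 2 * TICK_US    # 625 microseconds per slot
ALLOWED_LENGTHS = (1, 3, 5)


def slot_start_us(slot: int) -> float:
    """Time at which a given slot begins, counting from slot 0."""
    return slot * SLOT_US


def sender_for_slot(slot: int) -> str:
    """Who may START a transmission in this slot."""
    return "master" if slot % 2 == 0 else "slave"


def schedule(packet_lengths: list[int]) -> list[tuple[str, int, int]]:
    """Lay out back-to-back packets starting at slot 0.

    Returns (sender, first_slot, last_slot) for each packet. Because every
    allowed length is odd, the next packet always starts on the opposite
    parity, so senders alternate master, slave, master, ...
    """
    plan = []
    slot = 0
    for length in packet_lengths:
        if length not in ALLOWED_LENGTHS:
            raise ValueError("packets are 1, 3 or 5 slots long")
        plan.append((sender_for_slot(slot), slot, slot + length - 1))
        slot += length
    return plan


if __name__ == "__main__":
    for sender, first, last in schedule([1, 1, 3, 1, 5, 1]):
        print(f"{sender:6} slots {first}-{last}, starts at {slot_start_us(first):.1f} us")
```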

Bluetooth provides a secure way to connect and exchange information between devices such as faxes, mobile phones, telephones, laptops, personal computers, printers, Global Positioning System (GPS) receivers, digital cameras, and video game consoles.

The Bluetooth specifications are developed and licensed by the Bluetooth Special Interest Group (SIG). The Bluetooth SIG consists of more than 13,000 companies in the areas of telecommunication, computing, networking, and consumer electronics.

To be marketed as a Bluetooth device, a product must be qualified to standards defined by the SIG.

Evolution of Computers

Surveys show that the number of people with a computer at home has recently increased considerably. Few, however, know how computers evolved from the first machines into today's cutting-edge models. Here is the chronological evolution of computers:

- 1946: the creation of the world's first large-scale electronic digital computer, the ENIAC (Electronic Numerical Integrator and Computer), was announced.

- 1951-1959: the first generation of computers. They could perform calculations in milliseconds and were programmed in machine language.

- 1959-1965: the second generation of computers emerged. They could calculate in microseconds and were programmed in assembly language.

- 1965-1975: the third generation of computers was born. Many separate components were miniaturized and mounted onto a single chip, allowing calculations in nanoseconds, and programming was done in high-level, procedure-oriented languages.

- 1975-1981: the fourth generation of computers. It continued the third generation's trend toward miniaturized components and improved the integrated circuit (IC). The languages used in this generation were very high-level and problem-oriented.

- 1990s: better-quality software was released, along with the ability to process information more quickly.

- 2000s: after the turn of the millennium, computers continued the trend toward miniaturized components, making them more flexible and practical in daily tasks. There was also heavy investment in their design.

Thursday, September 23, 2010

Internet

Although the basic applications and guidelines that make the Internet possible had existed for almost two decades, the network did not gain a public face until the 1990s. On 6 August 1991, CERN, a pan European organization for particle research, publicized the new World Wide Web project. The Web was invented by British scientist Tim Berners-Lee in 1989. An early popular web browser was ViolaWWW, patterned after HyperCard and built using the X Window System. It was eventually replaced in popularity by the Mosaic web browser. In 1993, the National Center for Supercomputing Applications at the University of Illinois released version 1.0 of Mosaic, and by late 1994 there was growing public interest in the previously academic, technical Internet. By 1996 usage of the word Internet had become commonplace, and consequently, so had its use as a synecdoche in reference to the World Wide Web.

Meanwhile, over the course of the decade, the Internet successfully accommodated the majority of previously existing public computer networks (although some networks, such as FidoNet, have remained separate). During the 1990s, it was estimated that the Internet grew by 100 percent per year, with a brief period of explosive growth in 1996 and 1997. This growth is often attributed to the lack of central administration, which allows organic growth of the network, as well as the non-proprietary open nature of the Internet protocols, which encourages vendor interoperability and prevents any one company from exerting too much control over the network. The estimated population of Internet users is 1.97 billion as of 30 June 2010.

From 2009 onward, the Internet is expected to grow significantly in Brazil, Russia, India, China, and Indonesia (BRICI countries). These countries have large populations and moderate to high economic growth, but still low Internet penetration rates. In 2009, the BRICI countries represented about 45 percent of the world's population and had approximately 610 million Internet users. By 2015, the number of Internet users in BRICI countries is expected to double to 1.2 billion and to triple in Indonesia.
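Both growth figures in the paragraphs above are compound growth. Growing 100 percent per year means doubling every year. The BRICI forecast can be turned into an implied yearly rate with the compound-annual-growth formula (end / start)^(1 / years) - 1. This sketch assumes that "2009 to 2015" counts as six years. The function names are illustrative.

```python
def growth_factor(annual_rate: float, years: float) -> float:
    """Total multiplier after compounding `annual_rate` (1.0 = 100 %) for `years`."""
    return (1 + annual_rate) ** years


def implied_annual_rate(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # 100 % a year means doubling each year; over a decade that compounds to 2**10.
    print(f"Ten years at 100 %/yr: x{growth_factor(1.0, 10):.0f}")
    # BRICI forecast from the text, assuming 2009 -> 2015 is 6 years.
    rate = implied_annual_rate(610e6, 1.2e9, years=6)
    print(f"610 million -> 1.2 billion implies about {rate:.1%} per year")
```

Doubling from 610 million to 1.2 billion over six years therefore needs only about 12 percent growth a year, far slower than the 1990s pace.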

The first DVDs are launched in Brazil

Because of the devaluation of the Brazilian currency against the U.S. dollar, the delay in deciding which DVD region Brazil would adopt, and other factors, the DVD only became popular in Brazil in 2003, when it took nearly 80% of the video market. According to manufacturers in the industry, this was a delay of almost a year.

First transistor the size of an atom

In May 1996, American physicists David Wineland and Chris Monroe of the National Institute of Standards and Technology in Boulder, Colorado, showed that sometimes the impossible happens. In a sensational experiment, they managed to make an atom appear at two different points in space at exactly the same moment.

That does not mean you will now be able to attend two shows at the same time, but it is evidence that an atom can be here and there at the same instant. Under some circumstances, this is exactly how nature works. Before Wineland and Monroe, it was already known that subatomic particles could perform this feat, but no one had shown that the effect extended to a whole atom. Could creatures as big as cats repeat the feat? Here is what physicists have to say about that possibility.

Quantum mechanics is the branch of physics that studies atoms, inside and out. Built in the early decades of this century, it is arguably the greatest and most useful of all scientific theories. Today almost everything depends on it, from domestic appliances such as televisions and computers to the most refined instruments, such as radar and electron microscopes. Even more important, its equations were the first to explain the reactions of chemistry and biochemistry, the workings of the stars, and the universe as a whole. In all fairness, this century bears the face of quantum mechanics.

But not even physicists really understand what it means. "I think I can safely say that nobody understands quantum mechanics," wrote the American Richard Feynman (1918-1988), one of the most brilliant scientists of this century, known precisely for explaining difficult concepts without complications. In one of his lectures, Feynman laid his cards on the table: "Let me tell you how nature works," he said. "But avoid asking yourself, 'How can it be like that?', or you will end up at a dead end. Nobody knows why things are so."

Shortly after inventing the new mechanics, its creators became suspicious of what they had done. One of them, the Austrian Erwin Schrödinger, said in 1935 that if he took the laws of quantum mechanics seriously, he would have to believe in cats that are both dead and alive. To illustrate the point, he devised a thought experiment in which a cat is locked in a metal box with a vial of poison and a piece of radioactive metal. After one hour, what has happened to the animal? The answer, Schrödinger explained, depends on the metal. If it emits radiation, the vial breaks and the poison kills the cat. If not, the cat escapes the trap unscathed.
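In standard quantum notation, the situation Schrödinger found absurd is a superposition. Suppose, as in his 1935 description, that during the hour the radioactive sample decays or does not decay with equal probability. Until the box is opened, the combined state of the atom and the cat is then, in this idealized sketch:

```latex
|\psi\rangle = \frac{1}{\sqrt{2}}\Big(|\text{not decayed}\rangle\,|\text{alive}\rangle
             + |\text{decayed}\rangle\,|\text{dead}\rangle\Big),
\qquad
P(\text{alive}) = \Big|\tfrac{1}{\sqrt{2}}\Big|^{2} = \tfrac{1}{2} = P(\text{dead}).
```

Opening the box yields exactly one of the two outcomes, each with probability 1/2. The Wineland and Monroe experiment created the analogous two-place superposition for a single atom.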

First crewed Moon landing - Apollo 11

The Apollo 11 space flight landed the first humans on Earth's Moon on July 20, 1969. The mission, carried out by the United States, is considered a major accomplishment in the history of exploration and represented a victory by the U.S. in the Cold War Space Race with the Soviet Union.

Launched from Florida on July 16, the third lunar mission of NASA's Apollo Program (and the only G-type mission) was crewed by Commander Neil Alden Armstrong, Command Module Pilot Michael Collins, and Lunar Module Pilot Edwin Eugene "Buzz" Aldrin, Jr. On July 20, Armstrong and Aldrin landed in the Sea of Tranquility and became the first humans to walk on the Moon. Their landing craft, Eagle, spent 21 hours and 31 minutes on the lunar surface while Collins orbited above in the command ship, Columbia. The three astronauts returned to Earth with 47.5 pounds (21.55 kilograms) of lunar rocks and landed in the Pacific Ocean on July 24.

Apollo 11 fulfilled U.S. President John F. Kennedy's goal of reaching the Moon before the Soviet Union by the end of the 1960s, which he had set out in a 1961 speech before the United States Congress: "I believe that this nation should commit itself to achieving the goal, before this decade is out, of landing a man on the Moon and returning him safely to the Earth."

Five additional Apollo missions landed on the Moon from 1969 to 1972.

The first mobile phone is released in the U.S.

A mobile phone (also called mobile, cellular phone, cell phone or handphone) is an electronic device used for full duplex two-way radio telecommunications over a cellular network of base stations known as cell sites. Mobile phones differ from cordless telephones, which only offer telephone service within limited range through a single base station attached to a fixed land line, for example within a home or an office.

A mobile phone allows its user to make and receive telephone calls to and from the public telephone network which includes other mobiles and fixed line phones across the world. It does this by connecting to a cellular network owned by a mobile network operator. A key feature of the cellular network is that it enables seamless telephone calls even when the user is moving around wide areas via a process known as handoff or handover.
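Handoff works because the phone keeps measuring nearby cell sites while a call is in progress. Real networks use standardized measurement reports and operator-tuned parameters that are not described here. The sketch below is a toy decision rule under stated assumptions: the `Measurement` type, the function name and the 3 dB hysteresis margin are all hypothetical. It switches to a neighbouring cell only when that cell is clearly stronger than the current one.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    cell_id: str       # hypothetical identifier of a cell site
    signal_dbm: float  # received signal strength; higher (less negative) is better


def choose_serving_cell(current: str, measurements: list[Measurement],
                        hysteresis_db: float = 3.0) -> str:
    """Toy handover rule: move only if another cell is clearly stronger.

    The hysteresis margin (an assumed 3 dB) keeps a phone sitting between two
    cells of similar strength from bouncing back and forth between them.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    strongest = max(measurements, key=lambda m: m.signal_dbm)
    levels = {m.cell_id: m.signal_dbm for m in measurements}
    if current not in levels:
        # The serving cell is no longer heard at all: take the strongest one.
        return strongest.cell_id
    if strongest.signal_dbm > levels[current] + hysteresis_db:
        return strongest.cell_id
    return current


if __name__ == "__main__":
    # A phone travelling from cell "A" towards cell "B" (made-up readings).
    serving = "A"
    for a, b in [(-70, -95), (-80, -85), (-84, -82), (-90, -78)]:
        serving = choose_serving_cell(serving, [Measurement("A", a), Measurement("B", b)])
        print(f"A={a} dBm, B={b} dBm -> serving cell {serving}")
```

In the third reading, B is already slightly stronger than A, but the phone stays on A until B's advantage exceeds the margin. This kind of margin is what keeps a call from flapping between cells.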

In addition to being a telephone, modern mobile phones also support many additional services, and accessories, such as SMS (or text) messages, email, Internet access, gaming, Bluetooth, infrared, camera, MMS messaging, MP3 player, radio and GPS. Low-end mobile phones are often referred to as feature phones, whereas high-end mobile phones that offer more advanced computing ability are referred to as smartphones.

The first handheld cellular phone was demonstrated by Martin Cooper of Motorola in 1973, using a handset weighing in at two kilos. In the year 1990, 12.4 million people worldwide had cellular subscriptions. By the end of 2009, only 20 years later, the number of mobile cellular subscriptions worldwide reached approximately 4.6 billion, 370 times the 1990 number, penetrating the developing economies and reaching the bottom of the economic pyramid.
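The "370 times" figure is easy to check from the two subscription counts, and the same numbers give an implied yearly growth rate. This sketch assumes that 1990 to the end of 2009 counts as 19 years. With 20 years the rate would be slightly lower. The names are illustrative.

```python
SUBS_1990 = 12.4e6  # cellular subscriptions worldwide in 1990 (from the text)
SUBS_2009 = 4.6e9   # approximate subscriptions at the end of 2009 (from the text)


def growth_ratio(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


def implied_annual_rate(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    ratio = growth_ratio(SUBS_1990, SUBS_2009)
    rate = implied_annual_rate(SUBS_1990, SUBS_2009, years=19)  # assumed span
    print(f"{ratio:.0f} times the 1990 number, about {rate:.1%} growth per year")
```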

Laser discs are released revolutionizing the music industry

The LaserDisc (LD) is a home video disc format, and was the first commercial optical disc storage medium. Initially marketed as Discovision in 1978, the technology was licensed and sold as Reflective Optical Videodisc, Laser Videodisc, Laservision, Disco-Vision, DiscoVision, and MCA DiscoVision until Pioneer Electronics purchased the majority stake in the format and marketed it as LaserDisc in the mid to late 1980s.

While the format itself produced a consistently higher quality image than its rivals, the VHS and Betamax systems, it was poorly received in North America. In Europe and Australia, it remained largely an obscure format. It was, however, much more popular in Japan and in the more affluent regions of South East Asia, such as Hong Kong and Singapore. Laserdisc was the prevalent rental video medium in Hong Kong during the 1990s.

The technology and concepts behind the LaserDisc would become the forerunner of Compact Discs and DVDs.

The first communications satellites are launched

Westar 1 was used by Western Union for their own internal communications, such as for sending telegrams and mailgrams to Western Union bureaus and U.S. post offices respectively. It also was utilized by outside customers such as PBS, NPR and the Mutual Broadcasting System, who used it for sending television and radio programming via satellite to their local affiliate stations throughout the 1970s and '80s.

Westar 1 was retired from service in April 1983. The 15-meter dishes used to communicate with it have been decommissioned, but they can still be visited today by contacting Westar Satellite Services.

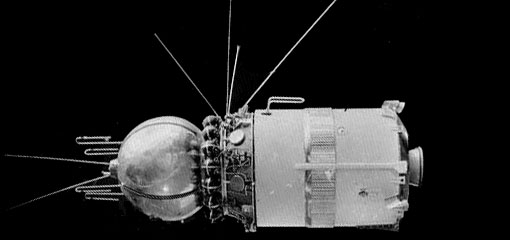

Vostok is launched, the first crewed spacecraft to leave Earth's atmosphere

The Vostok (Russian: Восток, translated as East) was a type of spacecraft built by the Soviet Union's space programme for human spaceflight.

The Vostok spacecraft was originally designed for use both as a camera platform (for the Soviet Union's first spy satellite program, Zenit) and as a manned spacecraft. This dual-use design was crucial in gaining Communist Party support for the program. The basic Vostok design has remained in use for some forty years, gradually adapted for a range of other unmanned satellites. The descent module design was reused, in heavily-modified form, by the Voskhod programme.

Vannevar Bush and the invention of the computer using radio valves

Vannevar Bush (March 11, 1890 – June 28, 1974; pronounced /væˈniːvɑr/ van-NEE-var) was an American engineer and science administrator known for his work on analog computing, his political role in the development of the atomic bomb as a primary organizer of the Manhattan Project, and the idea of the memex, an adjustable microfilm-viewer which is somewhat analogous to the structure of the World Wide Web. More specifically the memex worked as a memory bank to organize and retrieve data. As Director of the Office of Scientific Research and Development, Bush coordinated the activities of some six thousand leading American scientists in the application of science to warfare.

Bush was a well-known policymaker and public intellectual during World War II and the ensuing Cold War, and was in effect the first presidential science advisor. Bush was a proponent of democratic technocracy and of the centrality of technological innovation and entrepreneurship for both economic and geopolitical security.

Seeing later developments in the Cold War arms race, Bush became troubled. "His vision of how technology could lead toward understanding and away from destruction was a primary inspiration for the postwar research that led to the development of New Media."

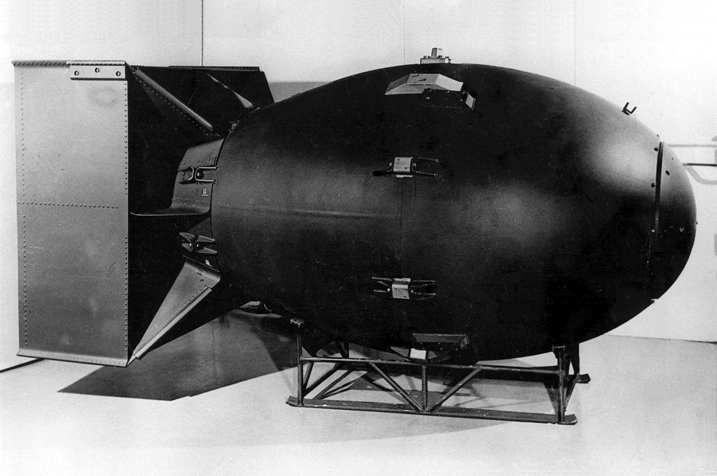

The U.S. sets off the first atomic bomb in the New Mexico desert

The history of nuclear weapons chronicles the development of nuclear weapons. Nuclear weapons are devices that possess enormous destructive potential derived from nuclear fission or nuclear fusion reactions. Starting with the scientific breakthroughs of the 1930s which made their development possible, and continuing through the nuclear arms race and nuclear testing of the Cold War, the issues of proliferation and possible use for terrorism still remain in the early 21st century.

The first fission weapons, also known as "atomic bombs," were developed jointly by the United States, Britain and Canada during World War II in what was called the Manhattan Project, to counter the assumed Nazi German atomic bomb project. An international team was dispatched to help work on the project. In August 1945, two of these bombs were dropped on Japan, ending the Pacific War. The Soviet Union started development shortly thereafter with its own atomic bomb project, and not long after that both countries developed even more powerful fusion weapons called "hydrogen bombs."

During the Cold War, the Soviet Union and United States each acquired nuclear weapons arsenals numbering in the thousands, placing many of them onto rockets which could hit targets anywhere in the world. Currently there are at least nine countries with functional nuclear weapons. A considerable amount of international negotiating has focused on the threat of nuclear warfare and the proliferation of nuclear weapons to new nations or groups.

There have been (at least) four major false alarms, the most recent in 1995, that resulted in the activation of either the US's or Russia's nuclear attack early warning protocols.

Motorola launches the walkie-talkie

A walkie-talkie, or handie-talkie (more formally known as a handheld transceiver), is a hand-held, portable, two-way radio transceiver. Its development during the Second World War has been variously credited to Donald L. Hings, radio engineer Alfred J. Gross, and engineering teams at Motorola. Similar designs were created for other armed forces, and after the war, walkie-talkies spread to public safety and eventually commercial and jobsite work. Major characteristics include a half-duplex channel (only one radio transmits at a time, though any number can listen) and a "push-to-talk" (PTT) switch that starts transmission. Typical walkie-talkies resemble a telephone handset, possibly slightly larger but still a single unit, with an antenna sticking out of the top. Where a phone's earpiece is only loud enough to be heard by the user, a walkie-talkie's built-in speaker can be heard by the user and those in the user's immediate vicinity. Hand-held transceivers may be used to communicate between each other, or to vehicle-mounted or base stations.
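A half-duplex channel with push-to-talk behaves like a simple shared resource: one talker, everyone else listening. The sketch below is a toy model with a hypothetical class and method names. One simplification should be stated loudly. This model refuses a second talker, but on real radios two people pressing PTT at once both transmit, and their signals simply interfere.

```python
from typing import Optional


class HalfDuplexChannel:
    """Toy model of one walkie-talkie channel: one talker, many listeners."""

    def __init__(self) -> None:
        self.talker: Optional[str] = None  # radio currently transmitting, if any

    def press_ptt(self, radio: str) -> bool:
        """Start transmitting if the channel is free (toy rule, see lead-in)."""
        if self.talker is None or self.talker == radio:
            self.talker = radio
            return True
        return False

    def release_ptt(self, radio: str) -> None:
        """Releasing the button frees the channel for someone else."""
        if self.talker == radio:
            self.talker = None

    def can_hear(self, radio: str) -> bool:
        """Every radio except the transmitting one receives the audio."""
        return self.talker is not None and radio != self.talker


if __name__ == "__main__":
    channel = HalfDuplexChannel()
    print(channel.press_ptt("alpha"), channel.press_ptt("bravo"))
    print(channel.can_hear("bravo"), channel.can_hear("charlie"))
    channel.release_ptt("alpha")
    print(channel.press_ptt("bravo"))
```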

Frank Whittle and the invention of the first British aircraft to fly with a turbojet engine

Air Commodore Sir Frank Whittle, OM, KBE, CB, FRS, Hon FRAeS (1 June 1907 – 9 August 1996) was a British Royal Air Force (RAF) engineer officer. Sharing credit with Germany's Dr. Hans von Ohain for independently inventing the jet engine (though some years earlier than Dr. von Ohain), he is hailed as the father of jet propulsion.

From an early age Whittle demonstrated an aptitude for engineering and an interest in flying. Determined to be a pilot, he overcame his physical limitations to be accepted into the RAF, where his abilities earned him a place on the officer training course at Cranwell. He excelled in his studies and became an accomplished pilot. While writing his thesis there he formulated the fundamental concepts that led to the creation of the jet engine, taking out a patent on his design in 1930. His performance on an officers' engineering course earned him a place on a further course at the University of Cambridge where he graduated with a First.

Without Air Ministry support, he and two retired RAF servicemen formed Power Jets Ltd to build his engine with assistance from the firm of British Thomson-Houston. Despite limited funding, a prototype was created, which first ran in 1937. Official interest was forthcoming following this success, with contracts being placed to develop further engines, but the continuing stress seriously affected Whittle's health, eventually resulting in a nervous breakdown in 1940. In 1944 when Power Jets was nationalised he again suffered a nervous breakdown, and resigned from the board in 1946.

In 1948 Whittle retired from the RAF and received a knighthood. He joined BOAC as a technical advisor before working as an engineering specialist in one of Shell Oil's subsidiaries followed by a position with Bristol Aero Engines. After emigrating to the U.S. in 1976 he accepted the position of NAVAIR Research Professor at the United States Naval Academy from 1977–1979. In August 1996, Whittle died of lung cancer at his home in Columbia, Maryland.

Alberto Santos-Dumont and the invention of the airplane

Alberto Santos-Dumont (July 20, 1873 – July 23, 1932) was a Brazilian early pioneer of aviation. Heir of a prosperous coffee producer family, Santos Dumont dedicated himself to science studies in Paris, France, where he spent most of his adult life.

Santos Dumont designed, built and flew the first practical dirigible balloons. In doing so he became the first person to demonstrate that routine, controlled flight was possible. This "conquest of the air", in particular winning the Deutsch de la Meurthe prize on October 19, 1901 on a flight that rounded the Eiffel Tower, made him one of the most famous people in the world during the early 20th century.

In addition to his pioneering work on airships, Santos-Dumont made the first European public flight of an airplane on October 23, 1906. Designated 14-bis or Oiseau de proie (French for "bird of prey"), the flying machine was the first fixed-wing aircraft witnessed by the European press and French aviation authorities to take off and fly successfully. His flight was the first to be certified by the Aéro Club de France and the Fédération Aéronautique Internationale (FAI). Santos-Dumont is considered the "Father of Aviation" in Brazil, his native country.

Santos-Dumont also occupied the 38th chair of the Brazilian Academy of Letters, from 1931 until his death in 1932.

John Ambrose Fleming and the invention of the thermionic valve

Sir John Ambrose Fleming (29 November 1849 – 18 April 1945) was an English electrical engineer and physicist. He is known for inventing, in 1904, the first thermionic valve or vacuum tube: the diode, then called the kenotron.[1] He also devised the right-hand rule, used in mathematics and electronics.[2]

He was born in Lancaster, Lancashire, the eldest of seven children of James Fleming DD (died 1879), a Congregational minister, and his wife, Mary Ann, and was baptized on 11 February 1850. He was a devout Christian and once preached at St Martin-in-the-Fields in London on the evidence for the resurrection. In 1932, along with Douglas Dewar and Bernard Acworth, he helped establish the Evolution Protest Movement. Having no children, he bequeathed much of his estate to Christian charities, especially those that helped the poor. He was an accomplished photographer; he also painted watercolours and enjoyed climbing in the Alps.

The Wright brothers and the first powered airplane flight

The Wright brothers, Orville (August 19, 1871 – January 30, 1948) and Wilbur (April 16, 1867 – May 30, 1912), were two Americans who are generally credited with inventing and building the world's first successful airplane and making the first controlled, powered and sustained heavier-than-air human flight, on December 17, 1903. In the two years afterward, the brothers developed their flying machine into the first practical fixed-wing aircraft. Although not the first to build and fly experimental aircraft, the Wright brothers were the first to invent aircraft controls that made fixed-wing powered flight possible.

The brothers' fundamental breakthrough was their invention of three-axis control, which enabled the pilot to steer the aircraft effectively and to maintain its equilibrium. This method became, and remains, standard on fixed-wing aircraft of all kinds. From the beginning of their aeronautical work, the Wright brothers focused on unlocking the secrets of control to conquer "the flying problem", rather than developing more powerful engines as some other experimenters did. Their careful wind tunnel tests produced better aeronautical data than any earlier work, enabling them to design and build wings and propellers more effective than any built before. Their U.S. patent 821,393 claims the invention of a system of aerodynamic control that manipulates a flying machine's surfaces.

They gained the mechanical skills essential for their success by working for years in their shop with printing presses, bicycles, motors, and other machinery. Their work with bicycles in particular influenced their belief that an unstable vehicle like a flying machine could be controlled and balanced with practice. From 1900 until their first powered flights in late 1903, they conducted extensive glider tests that also developed their skills as pilots. Their bicycle shop employee Charlie Taylor became an important part of the team, building their first aircraft engine in close collaboration with the brothers.

The Wright brothers' status as inventors of the airplane has been subject to counter-claims by various parties. Much controversy persists over the many competing claims of early aviators.

Guglielmo Marconi and the invention of the radio

Guglielmo Marconi (Italian pronunciation: [ɡuʎˈʎɛːlmo marˈkoːni]; 25 April 1874 – 20 July 1937) was an Italian inventor, best known for his development of a radio telegraph system, which served as the foundation for the establishment of numerous affiliated companies worldwide. He shared the 1909 Nobel Prize in Physics with Karl Ferdinand Braun "in recognition of their contributions to the development of wireless telegraphy" and was ennobled in 1924 as Marchese Marconi.

Thomas Alva Edison and the invention of the electric lamp

Thomas Alva Edison (February 11, 1847 – October 18, 1931) was an American inventor, scientist, and businessman who developed many devices that greatly influenced life around the world, including the phonograph, the motion picture camera, and a long-lasting, practical electric light bulb. Dubbed "The Wizard of Menlo Park" (now Edison, New Jersey) by a newspaper reporter, he was one of the first inventors to apply the principles of mass production and large teamwork to the process of invention, and therefore is often credited with the creation of the first industrial research laboratory. Edison's Menlo Park laboratory complex is said to live on in California's "invention factory" at Silicon Valley.[1]

Edison is considered one of the most prolific inventors in history, holding 1,093 U.S. patents in his name, as well as many patents in the United Kingdom, France, and Germany. He is credited with numerous inventions that contributed to mass communication and, in particular, telecommunications. These included a stock ticker, a mechanical vote recorder, a battery for an electric car, electrical power, recorded music and motion pictures. His advanced work in these fields was an outgrowth of his early career as a telegraph operator. Edison originated the concept and implementation of electric-power generation and distribution to homes, businesses, and factories, a crucial development in the modern industrialized world. His first power station was on Manhattan Island, New York.

Alexander Graham Bell and the invention of the telephone

Alexander Graham Bell (March 3, 1847 – August 2, 1922) was an eminent scientist, inventor, engineer and innovator who is generally credited with inventing the first practical telephone.

Bell's father, grandfather, and brother had all been associated with work on elocution and speech, and both his mother and wife were deaf, profoundly influencing Bell's life's work.[1] His research on hearing and speech further led him to experiment with hearing devices which eventually culminated in Bell being awarded the first U.S. patent for the telephone in 1876.[N 1] In retrospect, Bell considered his most famous invention an intrusion on his real work as a scientist and refused to have a telephone in his study.[3]

Many other inventions marked Bell's later life, including groundbreaking work in optical telecommunications, hydrofoils and aeronautics. In 1888, Alexander Graham Bell became one of the founding members of the National Geographic Society.[4]

Jean Joseph Étienne Lenoir and the invention of the internal combustion engine

Jean Joseph Étienne Lenoir (January 12, 1822 – August 4, 1900) was a Belgian engineer who developed an internal combustion engine in 1859. Earlier designs for such engines had been patented as early as 1807, but none were commercially successful. Lenoir's engine was sold in sufficient quantities to be considered a success, a first for the internal combustion engine.

He was born in Mussy-la-Ville (then in Luxembourg, part of Belgium from 1839). By the early 1850s he had emigrated to France, taking up residence in Paris, where he developed an interest in electroplating. His interest in the subject led him to make electrical inventions including an improved electric telegraph.

Louis-Jacques-Mandé Daguerre and the invention of photography

Louis-Jacques-Mandé Daguerre (November 18, 1787 – July 10, 1851) was a French artist and chemist, recognized for his invention of the daguerreotype process of photography.

Alessandro Volta and the invention of the electric cell

Count Alessandro Giuseppe Antonio Anastasio Volta (18 February 1745 – 5 March 1827) was an Italian physicist known especially for the development of the first electric cell in 1800.

Thomas Newcomen and the invention of the steam engine

Thomas Newcomen (born shortly before 24 February 1664;[1] died 5 August 1729) was an ironmonger by trade and a Baptist lay preacher by calling. He was born in Dartmouth, Devon, England, near a part of the country noted for its tin mines. Flooding was a major problem there, limiting the depth at which the tin could be mined. Newcomen created the first practical steam engine for pumping water, the Newcomen steam engine, and can therefore be regarded as a forefather of the Industrial Revolution.

John Floyer and the invention of the pulse watch

Sir John Floyer, an English physician, is best known for introducing the practice of measuring the pulse rate and for creating a special watch for this purpose.

Evangelista Torricelli and the invention of the barometer

Evangelista Torricelli (October 15, 1608 – October 25, 1647) was an Italian physicist and mathematician, best known for his invention of the barometer.

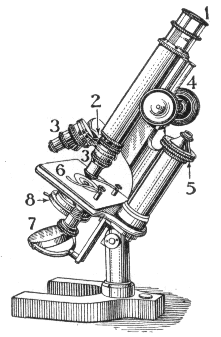

Sacharias Jansen and the invention of the microscope

Sacharias Jansen (c. 1580 – c. 1638) was a Dutch spectacle-maker from Middelburg credited with inventing, or contributing advances towards the invention of, the first telescope. Jansen is sometimes credited with inventing the first truly compound microscope. However, the origin of the microscope, like the origin of the telescope, is a matter of debate.

His name is often written as Zacharias Jansen or Zacharias Janssen, but because Dutch scientific literature spells it Sacharias Jansen, that spelling is also used in this article.

In 2008, the Netherlands commemorated the 400th anniversary of the telescope, honoring Jansen as one of its two possible inventors, the other being Hans Lippershey.

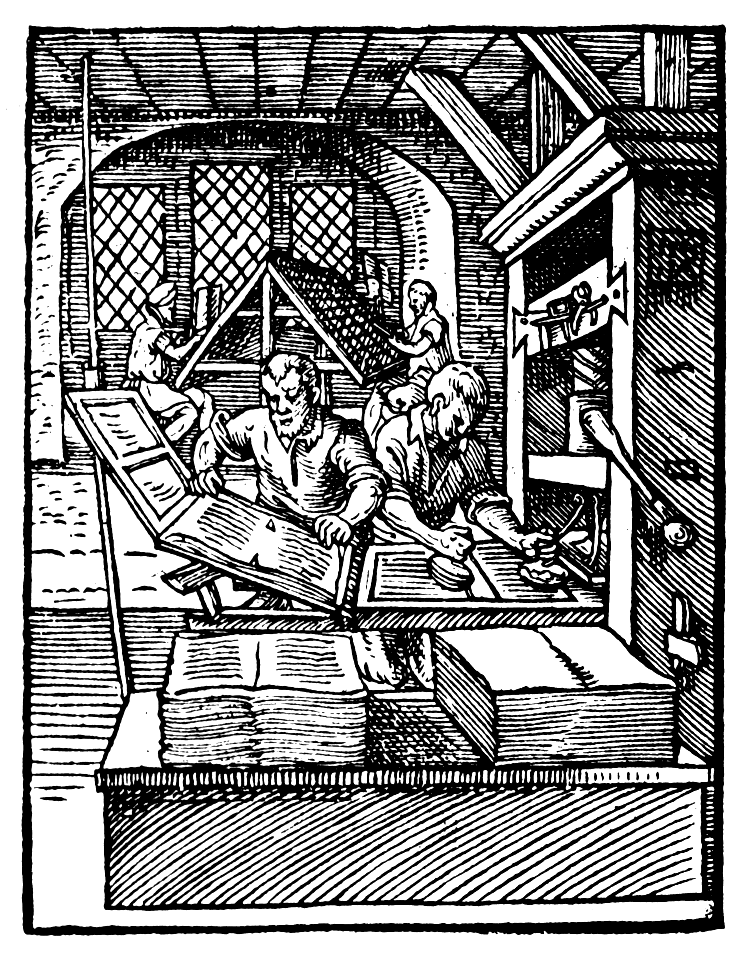

Johannes Gutenberg and the invention of the printing press

Johannes Gensfleisch zur Laden zum Gutenberg (c. 1398 – February 3, 1468) was a German goldsmith and printer who introduced modern book printing. His invention of mechanical movable type printing started the Printing Revolution and is widely regarded as the most important event of the modern period.[1] It played a key role in the development of the Renaissance, Reformation and the Scientific Revolution and laid the material basis for the modern knowledge-based economy and the spread of learning to the masses.[2]

Gutenberg was the first European to use movable type printing, around 1439, and the global inventor of the printing press. Among his many contributions to printing are the invention of a process for mass-producing movable type, the use of oil-based ink, and the use of a wooden printing press similar to the agricultural screw presses of the period. His truly epochal invention was the combination of these elements into a practical system that allowed the mass production of printed books and was economically viable for printers and readers alike. Gutenberg's method for making type is traditionally considered to have included a type metal alloy and a hand mould for casting type.

The use of movable type was a marked improvement on the handwritten manuscript, which was the existing method of book production in Europe, and upon woodblock printing, and revolutionized European book-making. Gutenberg's printing technology spread rapidly throughout Europe and later the world.

His major work, the Gutenberg Bible (also known as the 42-line Bible), has been acclaimed for its high aesthetic and technical quality.
